Release Remote Runner

Warning: This article is outdated. Please check the more recent article Release Runner installation.

Last updates:

- September 7, 2023
  - Moved to Release and xl CLI version 23.1.6
  - Moved to k8s version 1.28.1
  - Moved to remote-runner version 0.1.36

This document describes how I installed a Release Remote Runner on my local k8s cluster.

The official documentation is available here: Install Remote Runner using XL Kube

Environment:

| Component | Version | Note |
|---|---|---|
| Digital.ai Release | 23.1.6 | Release running on my laptop, port 5516 |
| xl | 23.1.6 | xl installed on my laptop |
| k8s | 1.28.1 | k8s cluster (2 nodes) running on my laptop (see my article for installation) |

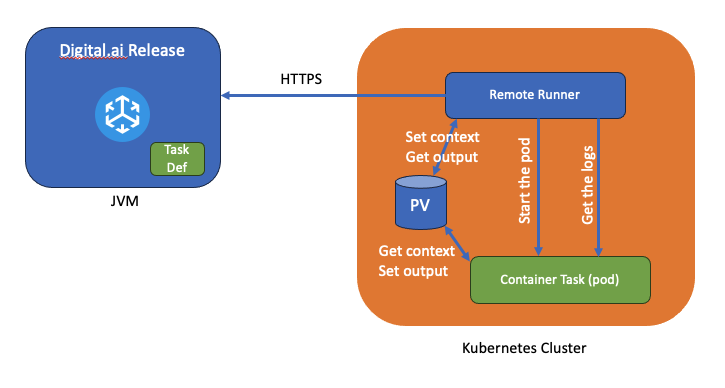

Definition: The Release Remote Runner

A Release Remote Runner is a container-based application that registers itself with a Release instance. The Remote Runner connects to Release and asks for jobs. When a task of type “Remote Task” is started, the Remote Runner starts an isolated pod in which the task is executed. The context (input parameters) and the output are shared through a persistent volume. Logs are sent back to Release dynamically while the task is running.
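To make the sequence concrete, here is a toy, in-memory Python model of the cycle described above: poll Release for a job, run it in isolation, exchange inputs and outputs through a shared volume, and stream logs back. Everything in it (`FakeRelease`, `RemoteTask`, `runner_cycle`, the dict standing in for the shared volume) is hypothetical and only illustrates the flow; it is not how Digital.ai implements the runner, and no real pod or network call is involved.

```python
"""Toy, in-memory model of the Remote Runner cycle (hypothetical names throughout)."""
from collections import deque
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RemoteTask:
    task_id: str
    inputs: dict
    # Task body; in the real product this runs inside an isolated pod.
    run: Callable[[dict, Callable[[str], None]], dict]


@dataclass
class FakeRelease:
    """Stands in for the Release server: queued jobs plus collected logs and results."""
    queue: deque = field(default_factory=deque)
    logs: dict = field(default_factory=dict)
    results: dict = field(default_factory=dict)

    def next_job(self):
        return self.queue.popleft() if self.queue else None


def runner_cycle(release: FakeRelease) -> bool:
    """One poll cycle: ask Release for a job and execute it if there is one."""
    task = release.next_job()
    if task is None:
        return False
    shared_volume = {"input": dict(task.inputs)}  # models the ReadWriteMany volume
    release.logs[task.task_id] = []

    def send_log(line: str) -> None:
        # Logs flow back to Release while the task is still running.
        release.logs[task.task_id].append(line)

    shared_volume["output"] = task.run(shared_volume["input"], send_log)
    release.results[task.task_id] = shared_volume["output"]
    return True


def greet(inputs: dict, log: Callable[[str], None]) -> dict:
    """Example task body: writes a log line and returns an output."""
    log("starting")
    return {"message": f"Hello {inputs['name']}"}


release = FakeRelease()
release.queue.append(RemoteTask("t1", {"name": "Release"}, greet))
while runner_cycle(release):  # keep polling until no job is left
    pass
print(release.results["t1"])  # {'message': 'Hello Release'}
print(release.logs["t1"])     # ['starting']
```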

TIP: If you want to see a sample Remote Task, check my article Release Python SDK Sample.

Step 1: Preparation

Create persistent volumes

If necessary, check that persistent volumes are available.

Two persistent volumes are needed for the Remote Runner (512Mi and 256Mi): one with ReadWriteOnce access mode and the other with ReadWriteMany access mode.

The ReadWriteMany volume is used to share information between the Remote Runner and the Container Task (see diagram above).

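I created two persistent volumes (pv4 and pv5) on my worker node. Both are 512Mi; a 512Mi volume can also serve the 256Mi claim, because a claim binds to a matching volume with at least the requested capacity: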

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv4
spec:
  capacity:
    storage: 512Mi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Delete
  storageClassName: local-storage
  local:
    path: /data/volumes/pv4
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - kubenode01
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv5
spec:
  capacity:
    storage: 512Mi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Delete
  storageClassName: local-storage
  local:
    path: /data/volumes/pv5
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - kubenode01
...
```
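Before applying the manifest, it can help to check that the two volumes actually cover the Remote Runner's two claims. The Python sketch below models only the basic binding rule (same storage class, requested access mode offered, capacity at least the request); Kubernetes' own matching considers more (selectors, volume mode, node affinity), so treat it as a rough check. The claim names and the pairing of 512Mi with ReadWriteOnce and 256Mi with ReadWriteMany are assumptions for illustration; the text above does not say which size goes with which access mode.

```python
"""Rough check that static PVs can satisfy the runner's claims (hypothetical claim data)."""
import re

_UNITS = {"": 1, "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(q: str) -> int:
    """Convert a binary-suffixed Kubernetes quantity such as '512Mi' to bytes."""
    m = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti)?", q)
    if not m:
        raise ValueError(f"unsupported quantity: {q}")
    return int(m.group(1)) * _UNITS[m.group(2) or ""]


def can_bind(pv: dict, claim: dict) -> bool:
    """A PV fits a claim if class and access mode match and capacity is large enough."""
    return (
        pv["storageClassName"] == claim["storageClassName"]
        and claim["accessMode"] in pv["accessModes"]
        and parse_quantity(pv["capacity"]) >= parse_quantity(claim["request"])
    )


def assign(pvs: list, claims: list) -> dict:
    """Greedily give each claim the smallest free PV that fits; returns claim -> PV name."""
    free = sorted(pvs, key=lambda pv: parse_quantity(pv["capacity"]))
    result = {}
    for claim in claims:
        match = next((pv for pv in free if can_bind(pv, claim)), None)
        if match is None:
            raise LookupError(f"no PersistentVolume can satisfy {claim['name']}")
        free.remove(match)
        result[claim["name"]] = match["name"]
    return result


# The two volumes from the manifest above.
pvs = [
    {"name": "pv4", "capacity": "512Mi", "accessModes": ["ReadWriteOnce"],
     "storageClassName": "local-storage"},
    {"name": "pv5", "capacity": "512Mi", "accessModes": ["ReadWriteMany"],
     "storageClassName": "local-storage"},
]
# Hypothetical claim names and size/access-mode pairing (see the lead-in).
claims = [
    {"name": "runner-data", "request": "512Mi", "accessMode": "ReadWriteOnce",
     "storageClassName": "local-storage"},
    {"name": "task-shared", "request": "256Mi", "accessMode": "ReadWriteMany",
     "storageClassName": "local-storage"},
]
print(assign(pvs, claims))  # {'runner-data': 'pv4', 'task-shared': 'pv5'}
```

With this pairing, pv4 serves the ReadWriteOnce claim and pv5 the ReadWriteMany claim; dropping pv5 from the list makes `assign` raise `LookupError`.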

Generate an access token for your Release user

The access token will be used for the connection to Release from the Remote Runner. Generate the token and keep it for use in the wizard.

Step 2: Run the wizard

Run the following command to configure the Remote Runner:

```shell
xl kube install
```

Answer the wizard questions. Here are my answers:

| LABEL | VALUE |
|---|---|
| CleanBefore | false |
| CreateNamespace | true |
| ExternalOidcConf | external: false |
| GenerationDateTime | 20230511-161159 |
| ImageNameRemoteRunner | xlr-remote-runner |
| ImageRegistryType | default |
| ImageTagRemoteRunner | 0.1.35 |
| IngressType | nginx |
| IsCustomImageRegistry | false |
| K8sSetup | PlainK8s |
| OidcConfigType | no-oidc |
| OsType | darwin |
| ProcessType | install |
| RemoteRunnerBaseHostPath | - |
| RemoteRunnerCount | 1 |
| RemoteRunnerReleaseName | remote-runner |
| RemoteRunnerReleaseUrl | http://10.0.2.2:5516 |
| RemoteRunnerRepositoryName | xebialabs |
| RemoteRunnerStorageClass | local-storage |
| RemoteRunnerToken | rpa_ffd201ea08e9504a5241cd4cafdd46698ef80e0e |
| RemoteRunnerUseDefaultLocation | true |
| ServerType | dai-release-runner |
| ShortServerName | other |
| UseCustomNamespace | false |

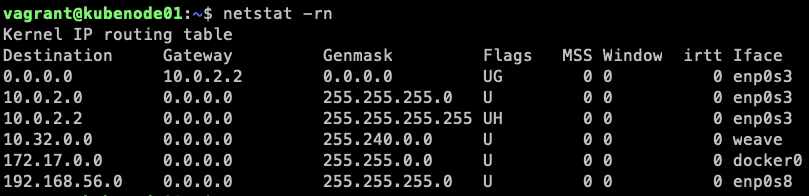
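Because the runner connects from inside the cluster, a `localhost` or loopback address in the RemoteRunnerReleaseUrl answer would point at the runner pod itself rather than at Release. A small Python sanity check for that answer could look like this; the function name `release_url_problems` is hypothetical and the check is offline only (it does not test that Release is actually reachable).

```python
"""Offline sanity check for the Release URL given to the wizard (hypothetical helper)."""
from ipaddress import ip_address
from urllib.parse import urlsplit


def release_url_problems(url: str) -> list:
    """Return reasons why `url` is unlikely to reach Release from inside a pod."""
    problems = []
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        problems.append(f"unexpected scheme {parts.scheme!r}")
    host = parts.hostname
    if not host:
        problems.append("missing host")
    elif host == "localhost":
        problems.append("localhost resolves to the runner pod itself, not to Release")
    else:
        try:
            if ip_address(host).is_loopback:
                problems.append(f"{host} is a loopback address inside the runner pod")
        except ValueError:
            pass  # a DNS name: cannot be judged offline
    return problems


print(release_url_problems("http://10.0.2.2:5516"))   # []
print(release_url_problems("http://localhost:5516"))
```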

Tip: The Release URL must be given as seen from inside your k8s cluster, so it depends on your configuration.

In my case, the IP address to use from my local k8s cluster can be found using the command:
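The original command is not reproduced here. As one possible approach, assuming the k8s nodes are VirtualBox VMs with NAT networking (which the 10.0.2.2 address suggests; in that mode the host is reachable at the guest's default gateway), the gateway can be read on a node from `/proc/net/route`, which is what `ip route show default` reports. The Python sketch below parses that file's text; the helper name and the sample table are made up for illustration.

```python
"""Find the default gateway in the text of Linux /proc/net/route (hypothetical helper)."""
import socket
import struct
from typing import Optional

RTF_GATEWAY = 0x2  # route flag: destination is reached through a gateway


def default_gateway(route_table: str) -> Optional[str]:
    """Return the default gateway as dotted-quad text, or None if there is none."""
    for line in route_table.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 4:
            continue
        destination, gateway, flags = fields[1], fields[2], int(fields[3], 16)
        # The default route has destination 0.0.0.0 and the gateway flag set.
        if destination == "00000000" and flags & RTF_GATEWAY:
            # Addresses are stored as little-endian hexadecimal.
            return socket.inet_ntoa(struct.pack("<L", int(gateway, 16)))
    return None


# Made-up sample resembling a VirtualBox NAT guest.
SAMPLE = (
    "Iface\tDestination\tGateway\tFlags\tRefCnt\tUse\tMetric\tMask\tMTU\tWindow\tIRTT\n"
    "eth0\t00000000\t0202000A\t0003\t0\t0\t100\t00000000\t0\t0\t0\n"
    "eth0\t0002000A\t00000000\t0001\t0\t0\t100\t00FFFFFF\t0\t0\t0\n"
)
print(default_gateway(SAMPLE))  # 10.0.2.2
```

On a real node, pass it the file contents, for example `default_gateway(open("/proc/net/route").read())`.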

Step 3: Verification

Check the pod status:

```shell
kubectl get pods -n digitalai
```

```
NAME              READY   STATUS    RESTARTS   AGE
remote-runner-0   1/1     Running   0          140m
```
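For a scripted check, a small Python sketch can parse the default table printed by `kubectl get pods` and report which pods are Running with all containers ready. Parsing the human-readable table is fragile; `kubectl wait --for=condition=Ready` or `-o json` output are sturdier choices. The helper name `pod_readiness` is hypothetical.

```python
"""Parse `kubectl get pods` table output into per-pod readiness (hypothetical helper)."""


def pod_readiness(kubectl_output: str) -> dict:
    """Map each pod name to True if it is Running and READY shows n/n."""
    result = {}
    for line in kubectl_output.strip().splitlines()[1:]:  # skip the header row
        name, ready, status = line.split()[:3]
        ready_count, total = (int(n) for n in ready.split("/"))
        result[name] = status == "Running" and ready_count == total
    return result


OUTPUT = """NAME              READY   STATUS    RESTARTS   AGE
remote-runner-0   1/1     Running   0          140m
"""
readiness = pod_readiness(OUTPUT)
print(readiness)  # {'remote-runner-0': True}
# An empty result means no pods were listed, which should also count as a failure.
print(bool(readiness) and all(readiness.values()))  # True
```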

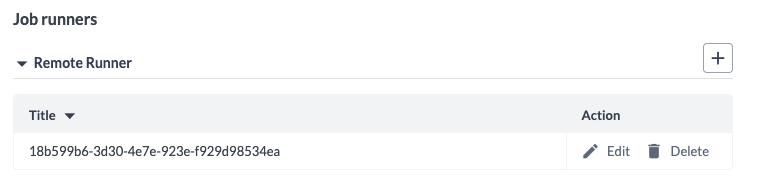

Connect to Release as an administrator and check that a new Remote Runner connection type has been created in the global Connections:

The Remote Runner is ready to execute some Release tasks.